Write an e-mail without even touching the keyboard. Place an order and print out a shipping label just by wanting to do so. An interface for controlling machines and devices with “your brain” has made its entrance on the market. This is how it all works.

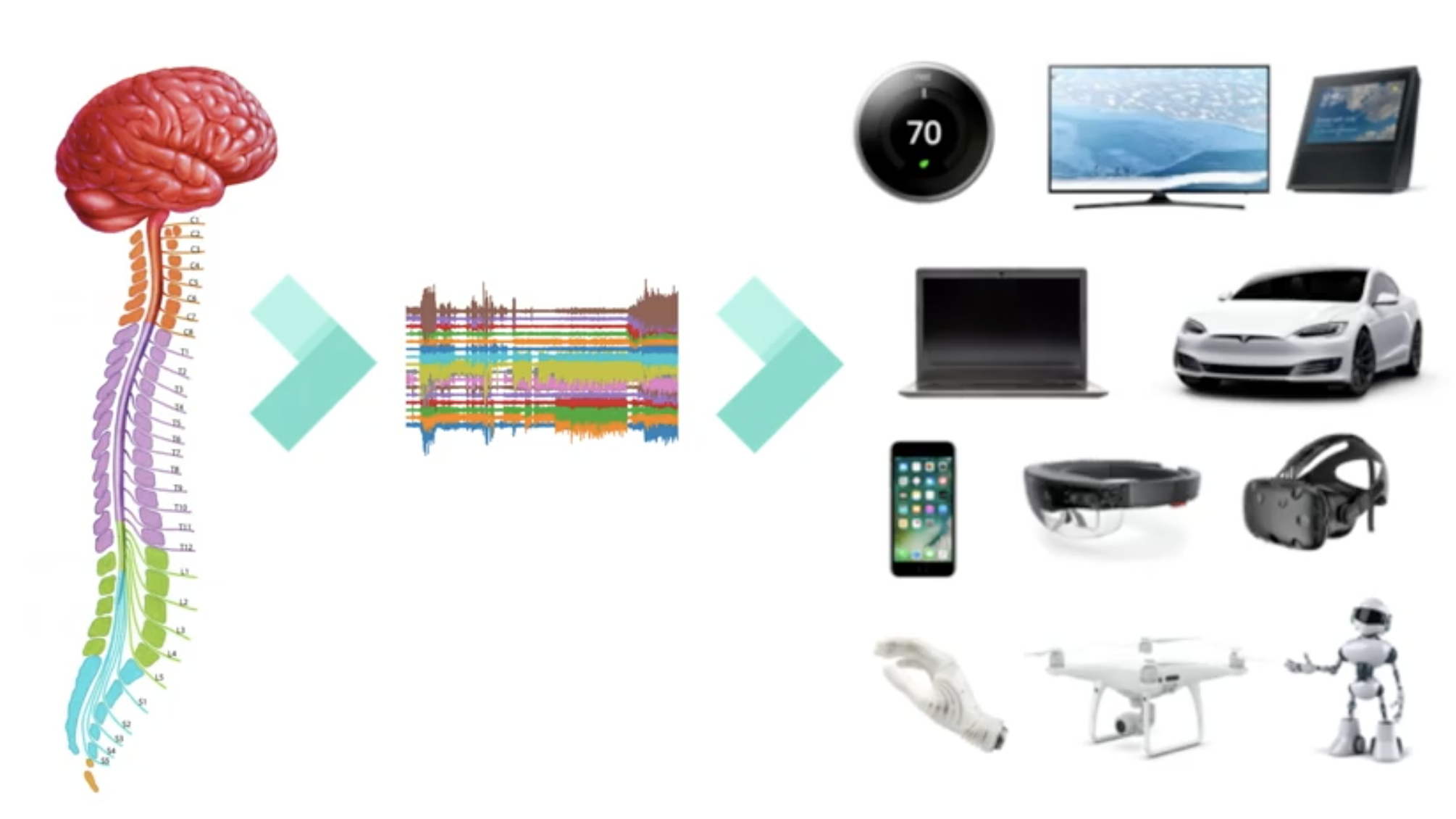

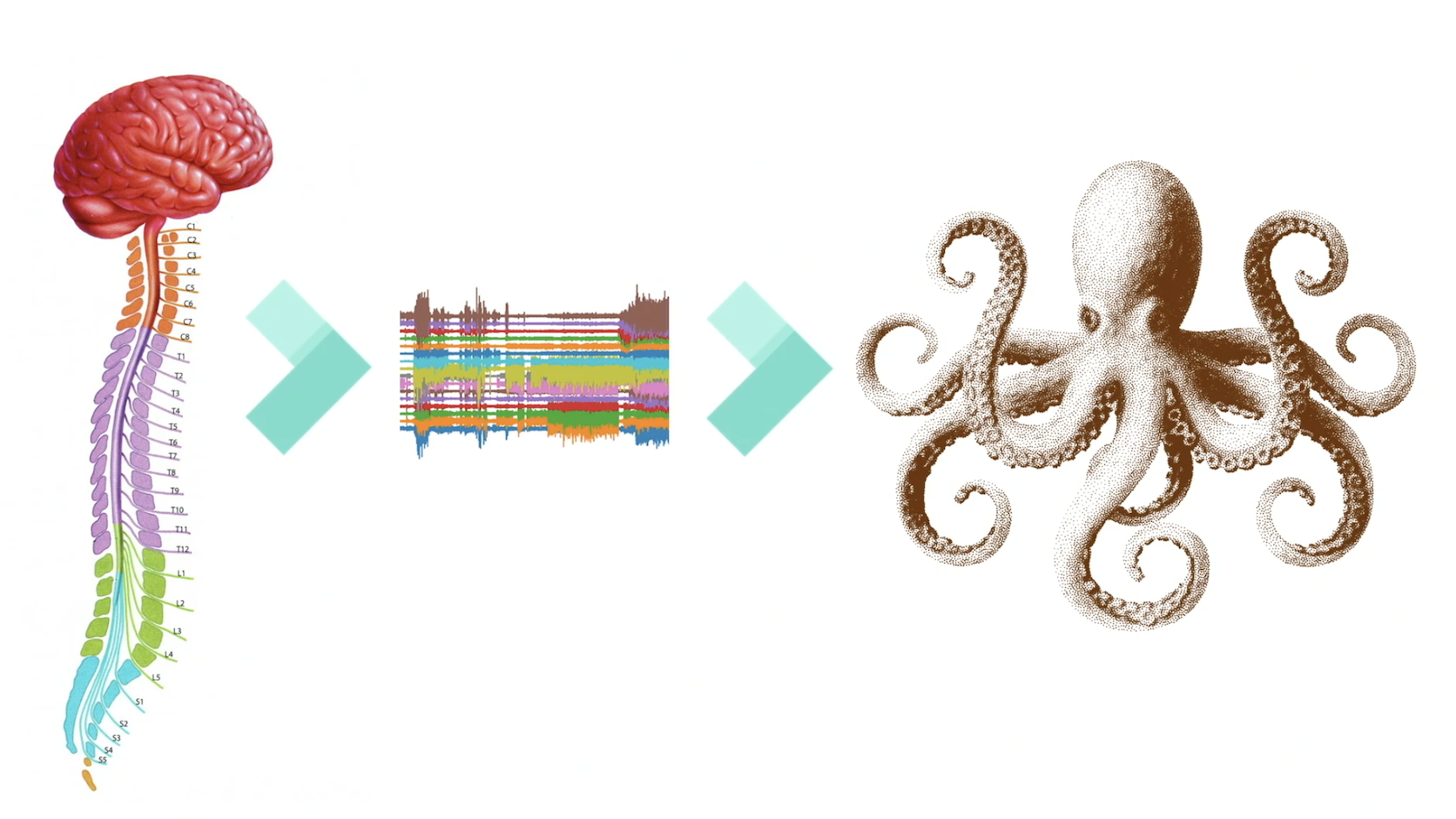

At Code 2018 in Rancho Palos Verdes, California, Adam Berenzweig, Director of Research and Development at CTRL-labs, demonstrates how the company’s new Machine-Brain Interface (MBI) works. It is quite a mind-blowing demonstration. Not just because the technology, a wristband, makes it possible for you and me to interact with a cell phone without taking a hand off the steering wheel (if you are driving) or without speaking a word (as a voice control interface would require), but also because its interface and API make it possible for anyone to test what it would be like to be an octopus and have eight arms.

All of this can be done without any medical procedures: no surgically inserted needles or electrodes placed on a carefully shaved head.

How is it possible?

Expanding the Neurological Bandwidth

How can the distance between human input and human output be reduced?

Adam Berenzweig and his colleagues at CTRL-labs point to the fact that the human nervous system processes large amounts of input via all our senses every day. We do this in the blink of an eye. When it comes to output, however, we are quite slow.

The human ability to physically control “the world” is slow because our movements and muscles are slow. And, we are not Flash Gordon when controlling a device with our voices either. That is why it takes us time when interacting with our devices.

“Our output ‘bandwidth’ is smaller,” says Berenzweig.

This does seem logical and rather poetic. At least as long as you still have to reach for a mouse, swipe a screen, or lean over a control panel.

To be able to overcome this “paradox,” the people at CTRL-labs have asked themselves the following question:

How can we remove the need for an intermediate control unit, such as a touchpad, microphone, touchscreen, etc., and thereby enable direct interaction with the digital world?

Phrased differently:

How can we decode the electrical activity the cerebral cortex sends out to the muscles? In other words: How can we decode the information currency of the nervous system and use its intentions in real time?

Or, to put it in plain English:

How can we control things with our mind?

The Interface That Understands Your Intentions

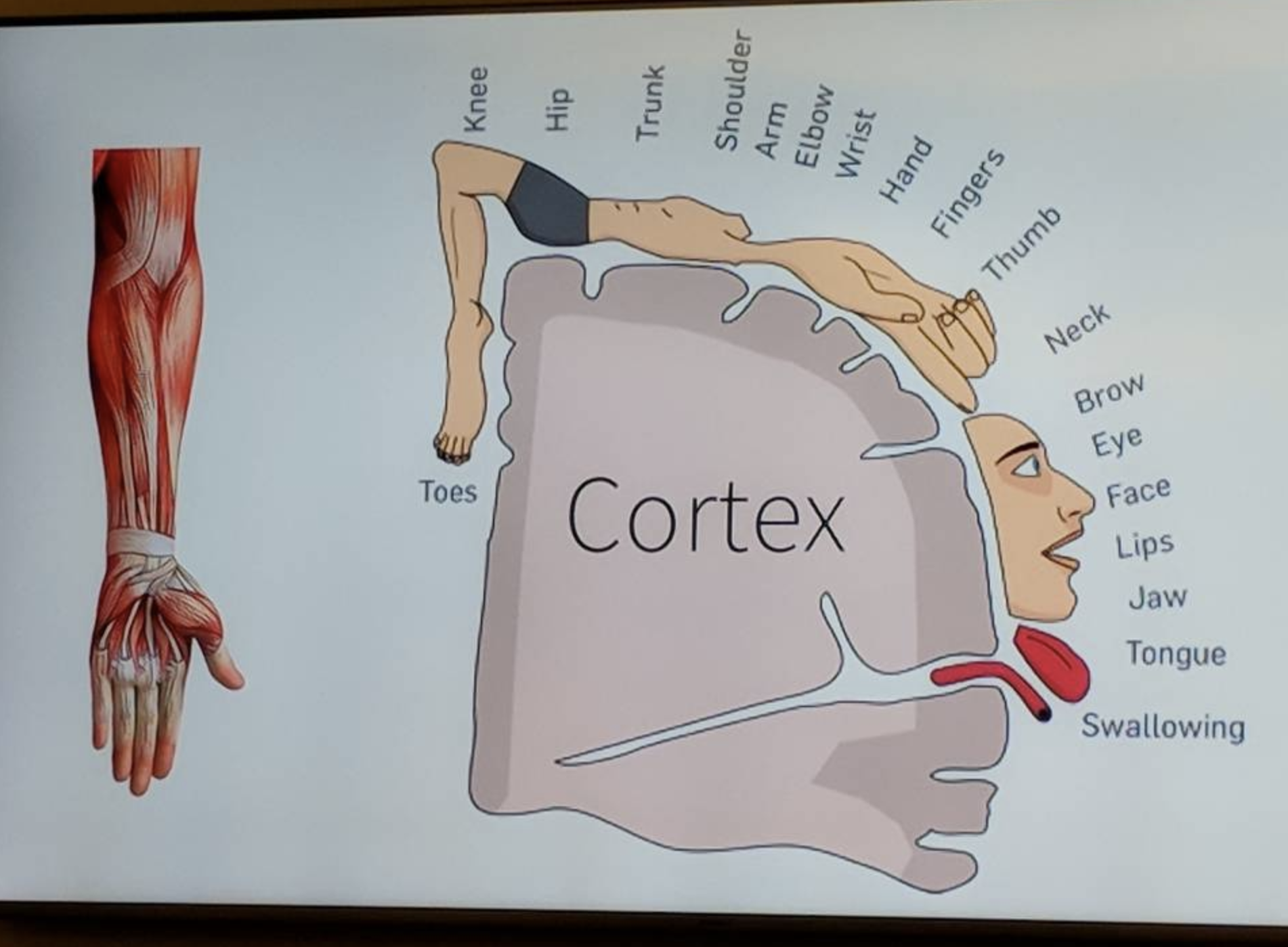

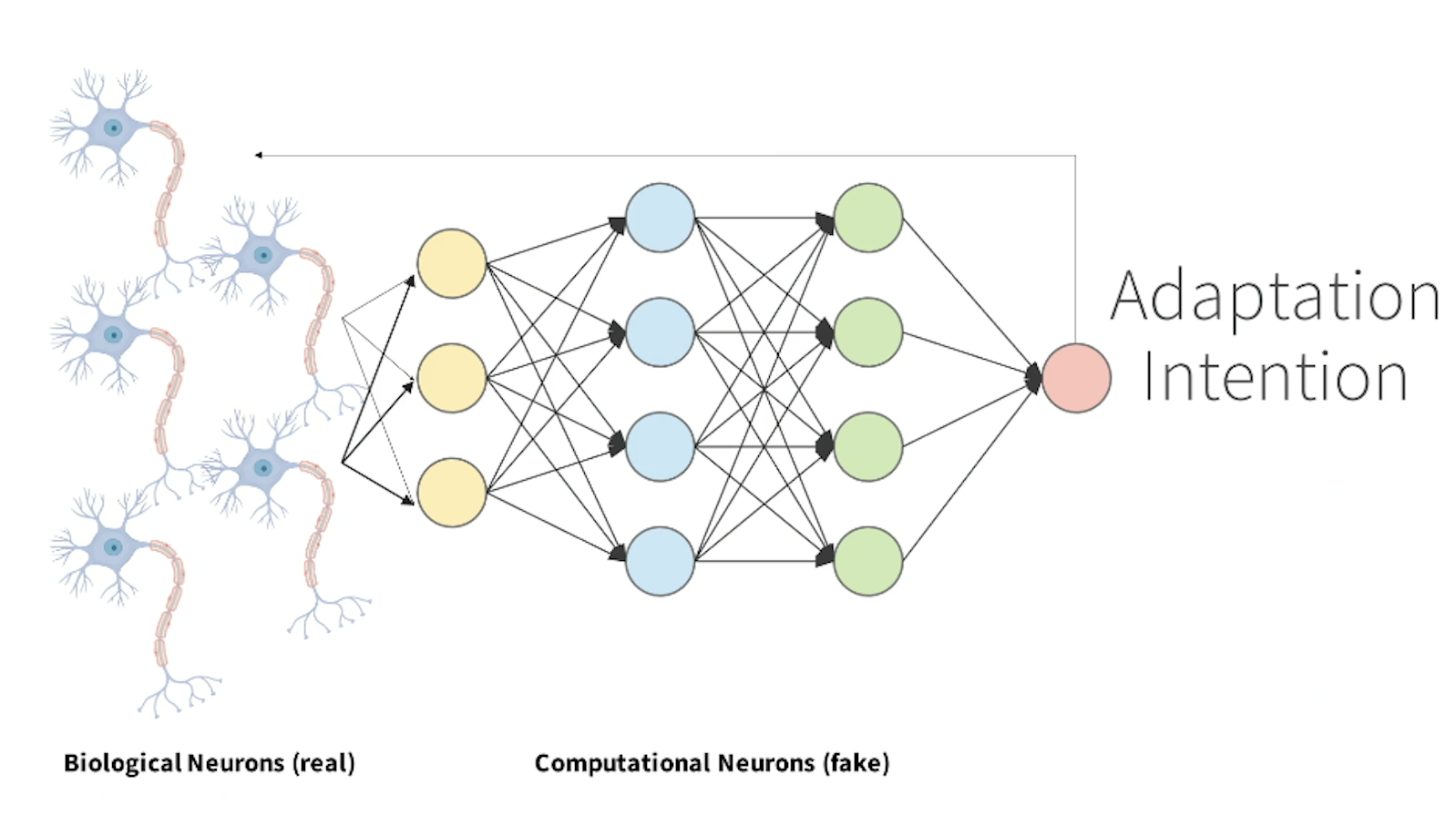

Berenzweig shows the audience the picture above and explains that they first had to decide which neurons they wanted to decode. CTRL-labs realized that the mouth and the hand are the most heavily represented areas in the motor cortex.

The hand, however, snags first place: The brain is wired to use the most energy to control and manipulate this part of the body. The signals that are sent to the hand (or any other part of the body) are also clearer and more reliable in their instructions compared to signals that can be measured on the head. When the signals have reached the arm and hand, their intentions are easier to read.

That is why they chose to focus on neural signals flowing to the hand. In this case, “focusing on” means, among other things, decoding individual nerve signals in the arm in real time, that is, while they are on their way to the hand carrying information about what the hand should do. “Decoding” means understanding the signals’ intentions.

How?

Muscle Control and Neuro-Control

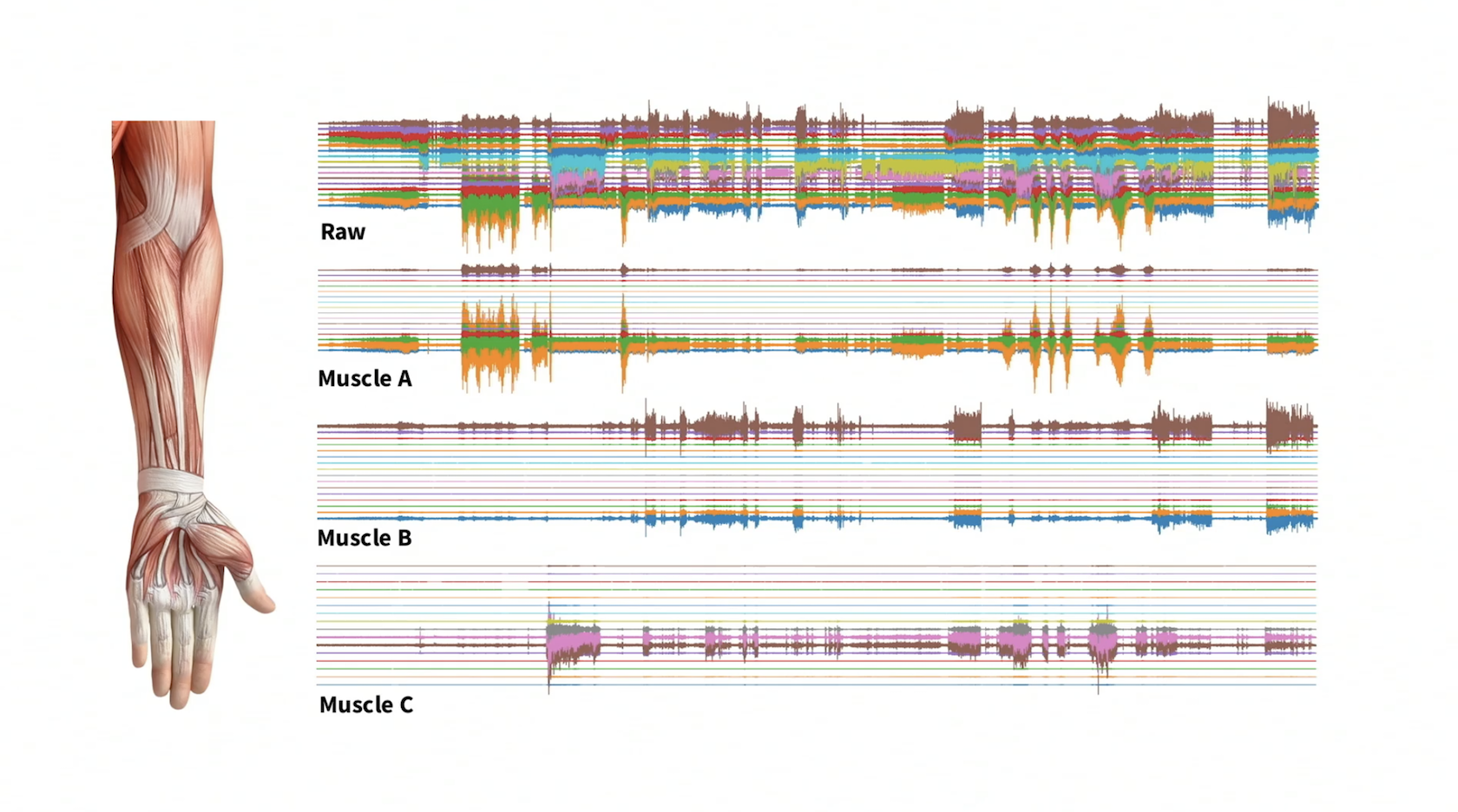

CTRL-labs decodes nerve signals with the help of a number of sensors and a technique called Surface Electromyography, Surface EMG for short.

The prefix “myo” refers to muscle, so myocontrol means muscle control.

With the help of Surface EMG, they can take a complex brain/nerve signal and, in a sense, translate it. Exactly how it is done is difficult to explain briefly, but the technique is called deconvolution: a mathematical signal-processing method that separates a mixed recording into the individual signals that produced it.
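To make the idea of deconvolution a little more concrete, here is a minimal toy sketch in Python. It is not CTRL-labs’ method: it assumes a single sensor, no noise, and a waveform that is already known, whereas real Surface EMG has to untangle many overlapping sources whose waveforms are unknown. The names `firing` and `signature` and their values are made up for illustration.

```python
def convolve(spikes, waveform):
    """Mix a spike train with a waveform: every spike leaves one copy of it."""
    out = [0.0] * (len(spikes) + len(waveform) - 1)
    for i, s in enumerate(spikes):
        for j, w in enumerate(waveform):
            out[i + j] += s * w
    return out


def deconvolve(signal, waveform, n_samples):
    """Undo the mixing by inverse filtering (requires waveform[0] != 0)."""
    spikes = []
    for n in range(n_samples):
        # Subtract the tails of earlier spikes that overlap sample n.
        overlap = sum(
            waveform[k] * spikes[n - k]
            for k in range(1, min(n, len(waveform) - 1) + 1)
        )
        spikes.append((signal[n] - overlap) / waveform[0])
    return spikes


# Hypothetical values: when one motor neuron fires (1) or stays silent (0),
# and the electrical "signature" a single firing leaves at the sensor.
firing = [0, 1, 0, 0, 1, 1, 0, 0]
signature = [1.0, 0.5, 0.25]

recorded = convolve(firing, signature)  # what the sensor would see
recovered = [round(x) for x in deconvolve(recorded, signature, len(firing))]
print(recovered)
```

In this idealized setting the recovered pattern matches the original firing pattern exactly. With noise and unknown waveforms, the problem becomes far harder, which is where the machine learning comes in.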

Surface EMG helps CTRL-labs understand what each and every one of the 14 muscles in the arm is trying to make the hand do, both when a person moves naturally and unnaturally. Moving unnaturally could be doing things like typing on a keyboard, clicking a mouse, using a touchscreen, etc.

Simply put, you could say that when the software understands how to interpret the signals, there is no longer a need for a keyboard, a mouse, a touchscreen, and so on.

But what is it the software understands, really? And how?

The Ones and Zeros of the Nervous System

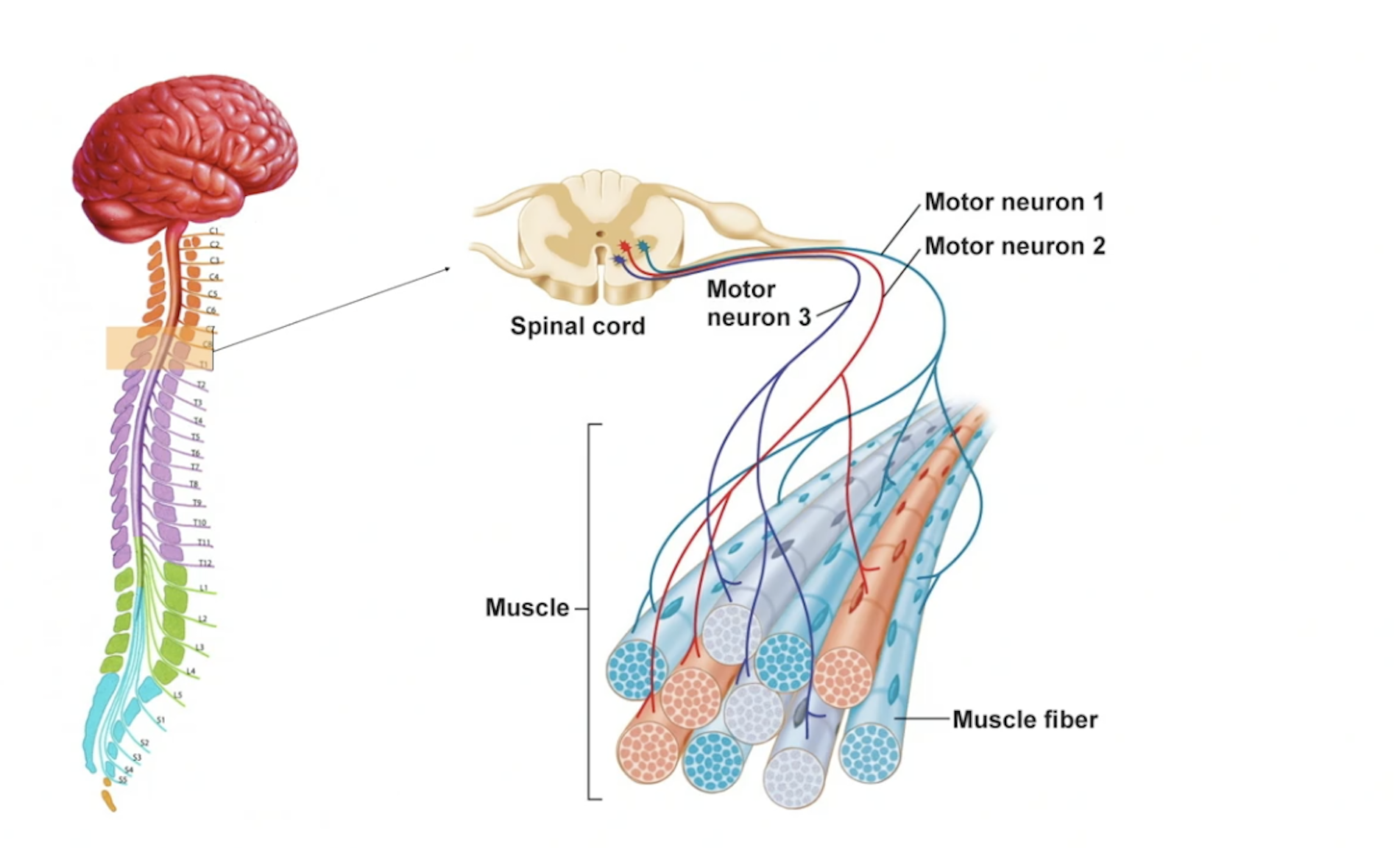

In the cerebral cortex region of the brain, there are cells called upper motor neurons. They send signals to the lower motor neurons in your spine. The neurons in your spine then pass the signal on to the muscles via their long axons. Upon reaching the muscle, the “transmission” releases neurotransmitters “onto” the muscle and thus controls individual muscle fibers. In other words, it turns the individual muscle fibers on and off.

The image above shows three clusters of muscle fibers, each controlled by its own motor neuron.

CTRL-labs understands what information these action neurons bring to the muscle. With the help of its technology, CTRL-labs can decode the ones and zeros in the nervous system’s information flow. They have, in other words, reached the core of the information that the brain sends out to the muscles.
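One way to picture these “ones and zeros” is as a spike train: a 1 at every moment a motor neuron fires, a 0 otherwise. The sketch below is only an illustration under simple assumptions, not the company’s algorithm. It assumes an activity trace for one neuron is already available, and the threshold, sample rate, and trace values are hypothetical.

```python
def to_spike_train(trace, threshold):
    """Mark a 1 at each sample where the trace rises across the threshold."""
    train = []
    above = False
    for value in trace:
        crossed = value >= threshold
        # Count only the upward crossing, not every sample above the line.
        train.append(1 if crossed and not above else 0)
        above = crossed
    return train


def firing_rate(train, sample_rate_hz):
    """Average number of firings per second over the whole recording."""
    return sum(train) * sample_rate_hz / len(train)


# Hypothetical activity trace for one motor neuron, sampled at 1000 Hz.
trace = [0.1, 0.2, 0.9, 0.8, 0.1, 0.0, 0.7, 0.2, 0.1, 0.95]
train = to_spike_train(trace, threshold=0.5)
rate = firing_rate(train, sample_rate_hz=1000)
print(train, rate)
```

How often and in which pattern a neuron fires is the kind of information a decoder could then map to an intended movement.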

Berenzweig claims that being able to understand this information is like digging out a communication signal that is just as powerful as the voice or the spoken word.

While the voice was developed in order to send information from one brain to another, the action neurons/motor neurons were developed to send information from the brain to the hand (for example). It is thanks to the hard work of CTRL-labs that these action neurons are now accessible and usable “outside” the hand for the first time.

Before CTRL-labs managed to develop a technology that could capture the signals of these action neurons, there was no way of seeing, understanding, rendering or putting them into action outside of the hand/body part. Or, as Berenzweig says in an interview:

“It’s as if there were no microphones and we didn’t have any ability to record and look at sound.”

Marrying Biological Neurons with Synthetic Neurons

The fundamental question in all of this has been:

How can we control things with our mind?

The answer to the question is:

By marrying biological neurons with synthetic neurons (so-called computational neurons).

To get to this point, which is the point where they can convert a human being’s “naked intention” or “sheer will” into decoded signals that devices can understand, they had to use coding, machine learning, and neuroscience.

And then one day: Presto! Mind control/neuro-control.

The most important thing, however, is not that you can now control your cell phone without touching it with a single finger, just by wearing a wristband on your forearm. The most important (and marvelous) thing is that you can, at the same time, adjust these intentions and expressions of will in cooperation with the machine. It is a sort of machine-learning dance between the device and the human being. You can choose what you want your brain signals to do in or via the device you want to control.

A Powerful API Between the Brain and the Machine

CTRL-labs has managed to minimize the distance between the brain and the machine by targeting the low-hanging fruit of the nervous system: the stream of signals flowing out from the brain and the spinal cord to control the body’s muscles. Targeting these signals turned out to be a stroke of genius, both pragmatically and strategically. (More about this at the end of the article.)

The result was a powerful API between the brain and the machine.

A Wristband on the Forearm

The prototype CTRL-labs has developed to realize the technology includes, among other things, a wristband like the one you can see on Berenzweig’s arm in the film clip at the top. The wristband captures his intentions by decoding his biological neurons with the help of digital neurons.

But how do you use the wristband?

How Do You Learn to Use the Interface?

The answer to that question depends on which application you want to use. According to Berenzweig, some applications only take a few minutes for the user to grasp.

In order to get a grasp on an application, the user receives a short training session, where the user’s “output” signals and the machine’s “intake” feature get to know each other.
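A short training session like this can be pictured as a calibration step: the user repeats each gesture a few times, the software stores an average “fingerprint” per gesture, and new signals are matched to the closest fingerprint. The sketch below uses a nearest-centroid classifier as a stand-in. It is an assumption made for illustration, not a description of CTRL-labs’ software, and the gesture names and feature values are invented.

```python
def train_centroids(examples):
    """Average the feature vectors recorded for each gesture label."""
    centroids = {}
    for label, samples in examples.items():
        n = len(samples)
        centroids[label] = [sum(column) / n for column in zip(*samples)]
    return centroids


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(centroids, features):
    """Return the gesture whose averaged fingerprint is closest."""
    return min(
        centroids,
        key=lambda label: squared_distance(centroids[label], features),
    )


# Hypothetical calibration data: two repetitions per gesture, each reduced
# to three muscle-activity features between 0 and 1.
calibration = {
    "pinch": [[0.9, 0.1, 0.2], [0.8, 0.2, 0.1]],
    "fist": [[0.7, 0.9, 0.8], [0.9, 0.8, 0.9]],
    "rest": [[0.1, 0.0, 0.1], [0.0, 0.1, 0.0]],
}

centroids = train_centroids(calibration)
gesture = classify(centroids, [0.85, 0.15, 0.2])
print(gesture)
```

The more repetitions the user provides, the better the averages reflect that person’s own signals, which is one plausible reason a few minutes of practice can be enough for simple applications.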

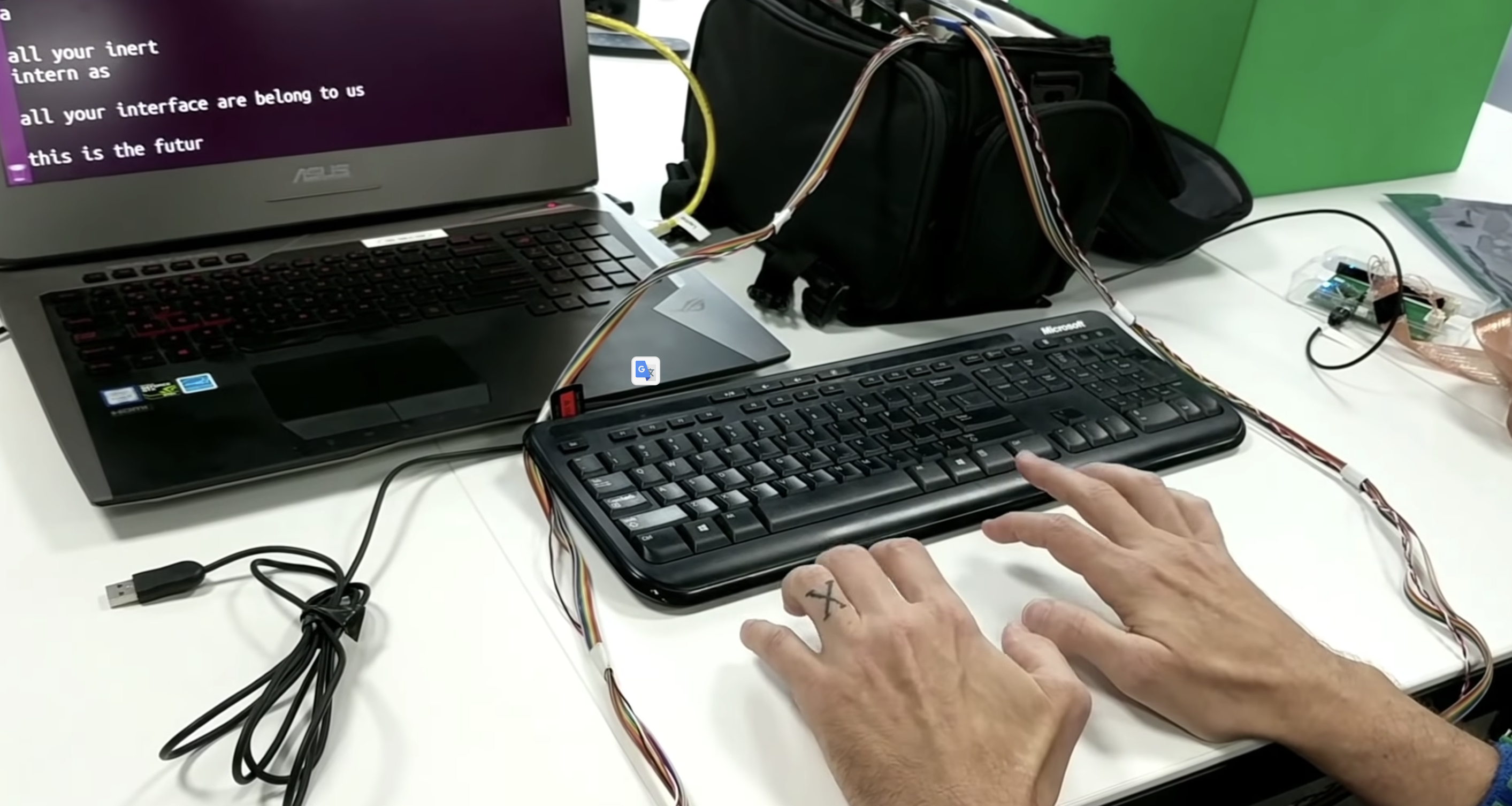

Other applications or challenges may take longer to learn, such as when the user wants to do something more than, or something other than, imitating the actions or movements they already do. By “movements they already do,” CTRL-labs means typing on a keyboard, for example. Imitating this can be done by typing in the air, which is demonstrated in this film clip.

Or by playing Asteroids on a smartphone without moving your hand.

CTRL-labs believes that the wristband has the potential to become a new normal for how to interact with the world/machines. At the same time, the need to learn how to type on an “invisible” keyboard will disappear because other ways of writing will be developed instead.

It might, therefore, only be truly interesting when we, in time, are able to explore what CTRL-labs’ MBI can teach us about our brain and its abilities.

One of the company’s advisors, John Krakauer (professor of neurology), says that the human hand is a very useful tool, but he also wonders whether our brains can handle much more complex tools than the hand.

Constant Movement is a Central Feature

Neuroscience has been divided into two areas, where one “side” focuses on what is happening inside the brain, and the other “side” focuses on the brain’s output.

CTRL-labs belongs to the latter category.

Daniel Wolpert is a prominent figure in this area of neuroscience, and his research shows that humans have a brain ONLY in order to produce complex and adaptive movements. It is only through movement that you can affect your surroundings and interact with the world.

CTRL-labs likes to emphasize that their side is the more pragmatic side. It is a movement that suits those who think that the default situation should not be a world where technology “owns” people and where focus, therefore, is placed on how to give us more control over technology right here and right now, now, NOW!

Whatever you think about this approach, it is certain that you will be able to buy a CTRL-labs kit already this year. It undeniably appears that the team focusing on movement and brain output has won some kind of race to find an interface that gives us mind-over-machine control.

From the point of view of a supply chain nerd, this feels a bit like seeing your own team win. For an entrepreneur in digital logistics and logistics management, there is no doubt that flows and constant movement make up the very substance of our operations.
